Each use case offers a real-world example of how companies are taking advantage of data insights to improve decision-making, enter new markets, deliver better customer experiences, and increase their profit margin of course. Below the six industries with use cases related with it.

Manufacturing

- Predictive maintenance

- Operational Efficiency

- Production Optimization

Retail

- Product Development

- Customer Experience

- Customer Lifetime Value

- The in-store shopping experience

- Pricing Analytics and Optimzation

Healthcare

- Genomic Research

- Patient Experience and Outcomes

- Claims Fraud

- Healthcare Billing Analytics

Oil and Gas

- Predictive Equipment Maintenance

- Oil Exploration and Discovery

- Oil Production Optimization

Telecommunication

- Optimize Network Capacity

- Telecom Customer Churn

- New Product Offerings

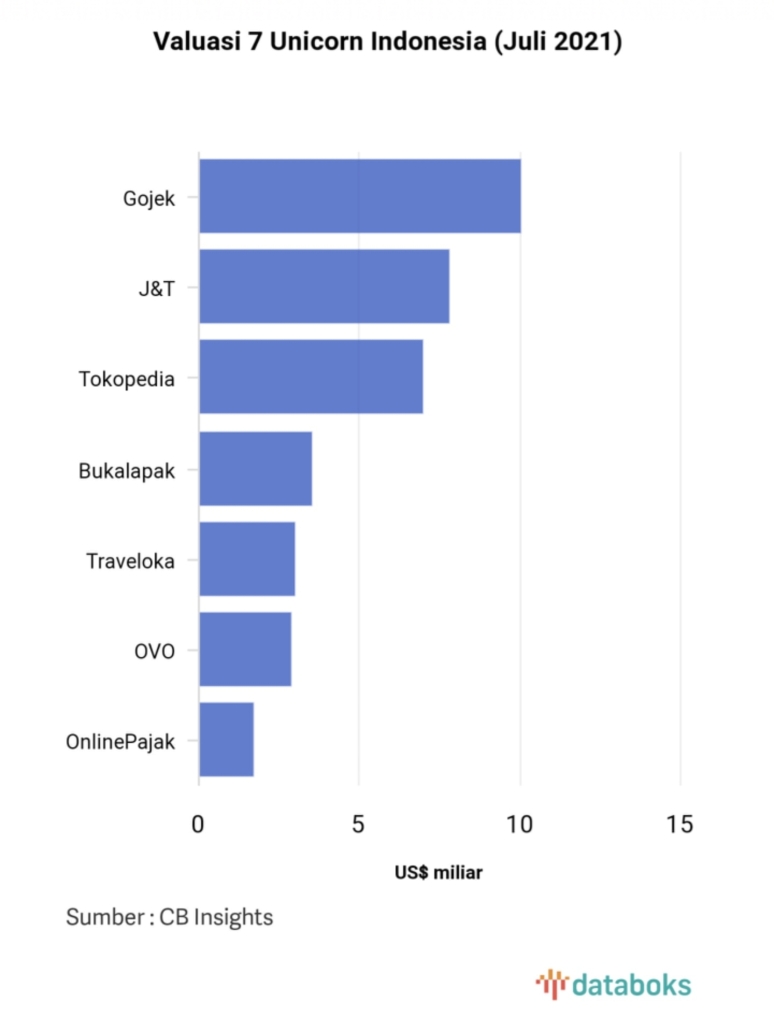

Financial Service

- Fraud and Compliance

- Drive Innovation

- Anti Money Laundring

- Financial Regulatory and Compliance Analytics

The financial services industry is up against entire expert teams. Using big data tools, companies can identify patterns that indicate fraud, discrepency, and aggregate large volumes of information to streamline regulatory reporting. To identify potential fraud patterns, companies will need to sift through a large volume of data. ( see ELK : https://tifosilinux.wordpress.com/2019/03/11/debugger-on-elk/ or https://tifosilinux.wordpress.com/2020/10/12/a-fluent-performance-by-fluentd/ )